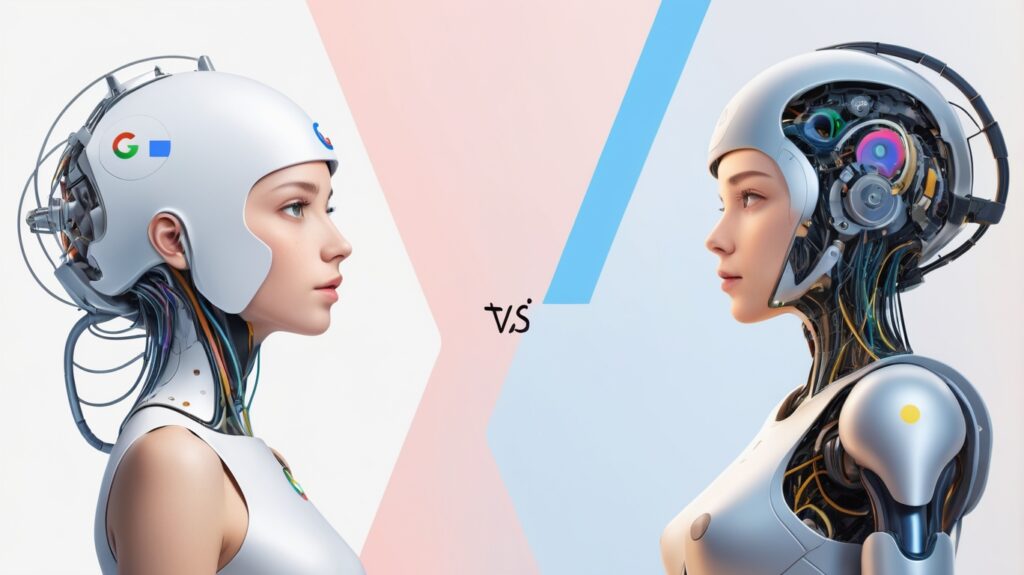

The world of artificial intelligence is moving at breakneck speed, and tech giants are locked in a fierce competition to develop the most powerful and versatile large language models (LLMs). Two recent contenders are Google’s Gemini 2.0 Flash Thinking and OpenAI’s o1. This post compares the two models, exploring their strengths, weaknesses, and real-world implications.

Google Gemini 2.0 Flash Thinking: Speed and Smarts Combined

Google Gemini 2.0 Flash Thinking represents a significant step forward in LLM technology. The “Flash” line of models is built for fast response times, making it well suited to applications requiring immediate feedback. The “Thinking” mode, a key differentiator, has the model reason through a problem before answering, which helps it tackle complex problems and deliver more nuanced responses than its predecessors. Gemini’s multimodal capabilities are also noteworthy: it accepts images as well as text as input, opening up a wide range of potential applications. Integration with other Google services, such as Search and Maps, further enhances its utility. The model is currently experimental; initial benchmarks suggest impressive performance, though comprehensive, independently verified data is still emerging.

The experimental nature of Gemini 2.0 Flash Thinking is important to acknowledge. While its capabilities are promising, it’s crucial to remember that it’s still under development, and its performance may vary across different tasks and contexts. Further testing and refinement are expected before it reaches full maturity. Google’s commitment to continuous improvement suggests that future iterations will likely address any current limitations.

OpenAI o1: A Strong Contender in the LLM Arena

OpenAI’s o1 is another powerful LLM known for its robust reasoning abilities. While perhaps not as fast as Gemini’s Flash technology, o1 compensates with its sophisticated understanding of complex queries and its ability to generate detailed and coherent responses. OpenAI has a strong track record in developing advanced LLMs, and o1 builds upon this foundation. However, specific benchmarks comparing o1 directly to Gemini 2.0 Flash Thinking on a wide range of tasks are still relatively limited at this time, making a definitive judgment challenging.

One potential weakness of o1, compared to Gemini, might lie in its multimodal capabilities. While OpenAI is actively developing multimodal models, o1’s current focus appears to be primarily on text-based interactions. This could limit its applicability in scenarios requiring image or audio processing. This is, however, an area of rapid development, and future iterations may address this limitation.

Gemini 2.0 Flash Thinking vs. OpenAI o1: A Head-to-Head Comparison

| Feature | Google Gemini 2.0 Flash Thinking | OpenAI o1 |
| --- | --- | --- |
| Speed | Fast (Flash model line) | Relatively slower |
| Reasoning | Strong, enhanced by “Thinking” mode | Strong, known for detailed and coherent responses |
| Multimodal | Yes (text and image input) | Primarily text-based (currently) |
| Ease of Use | Generally user-friendly, integrated with Google services | Dependent on the specific API or ChatGPT interface used |
| Cost | Pricing details may vary depending on usage | Pricing details may vary depending on usage |
| Integration | Seamless integration with Google ecosystem | Integration depends on the chosen platform |

While Chatbot Arena has provided some comparative data, it’s important to note that benchmark results can be influenced by various factors, including the specific tasks used for evaluation and the methodologies employed. Therefore, these results should be interpreted cautiously and not considered definitive proof of superiority for either model.
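Because published numbers depend so heavily on tasks and methodology, it can help to time both models on your own prompts. The following is a minimal sketch of such a harness, not an official benchmark. The names `measure_latency`, `fake_fast_model`, and `fake_slow_model` are hypothetical and exist only for illustration; the two stubs simply sleep and would need to be replaced with real client calls to each provider.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def measure_latency(
    call_model: Callable[[str], str],
    prompts: Iterable[str],
    repeats: int = 3,
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, float]:
    """Time call_model on each prompt `repeats` times and summarize the samples."""
    samples = []
    for prompt in prompts:
        for _ in range(repeats):
            start = clock()
            call_model(prompt)
            samples.append(clock() - start)
    if not samples:
        raise ValueError("no prompts to measure")
    return {
        "runs": len(samples),
        "median_s": statistics.median(samples),
        "max_s": max(samples),
    }


# Hypothetical stand-ins (assumption): they only sleep, they do not call any API.
def fake_fast_model(prompt: str) -> str:
    time.sleep(0.001)
    return "stub answer"


def fake_slow_model(prompt: str) -> str:
    time.sleep(0.005)
    return "stub answer"


if __name__ == "__main__":
    prompts = ["Summarize this paragraph.", "Solve: 17 * 23"]
    for name, model in [("fast stub", fake_fast_model), ("slow stub", fake_slow_model)]:
        print(name, measure_latency(model, prompts))
```

Reporting the median alongside the maximum keeps a single slow request from dominating the comparison, and running the same prompts against both models keeps the test like-for-like.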

Real-World Applications: Where Each Model Shines

Both Gemini 2.0 Flash Thinking and OpenAI o1 hold considerable potential across various sectors. Gemini’s speed makes it well suited to applications requiring rapid responses, such as real-time customer service chatbots or interactive assistants. Its multimodal input could support content workflows that combine text and images. OpenAI o1, with its strong reasoning skills, could excel in tasks demanding in-depth analysis, such as complex data analysis or scientific research.

The choice between the two models ultimately depends on the specific needs of the user. For applications prioritizing speed and multimodal functionality, Gemini 2.0 Flash Thinking might be the preferred choice. For tasks requiring sophisticated reasoning and in-depth analysis, OpenAI o1 could be a more suitable option.
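The rule of thumb above can be written down as a small helper. This is only a sketch of the article's own criteria, assuming each requirement counts equally; the `Needs` fields and the `suggest_model` function are hypothetical names, and the result is a starting point for testing, not a verdict.

```python
from dataclasses import dataclass

GEMINI = "Gemini 2.0 Flash Thinking"
O1 = "OpenAI o1"


@dataclass(frozen=True)
class Needs:
    """Requirements for a project (all fields are illustrative assumptions)."""
    low_latency: bool = False
    image_input: bool = False
    google_integration: bool = False
    deep_reasoning: bool = False
    text_only: bool = False


def suggest_model(needs: Needs) -> str:
    """Count which model's listed strengths match more of the stated needs."""
    gemini_votes = sum([needs.low_latency, needs.image_input, needs.google_integration])
    o1_votes = sum([needs.deep_reasoning, needs.text_only])
    if gemini_votes > o1_votes:
        return GEMINI
    if o1_votes > gemini_votes:
        return O1
    # A tie means the criteria do not separate the models for this project.
    return "either (test both on your own workload)"


if __name__ == "__main__":
    print(suggest_model(Needs(low_latency=True, image_input=True)))
    print(suggest_model(Needs(deep_reasoning=True, text_only=True)))
```

Equal weighting is a simplification: a project with one hard requirement, such as image input, should treat that requirement as decisive instead of counting votes.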

Conclusion: The Future of LLMs Remains Open

The race between Google and OpenAI to develop the most advanced LLMs is far from over. Both Gemini 2.0 Flash Thinking and OpenAI o1 represent significant advancements, each with its own unique strengths and weaknesses. While Gemini’s speed and multimodal capabilities are impressive, o1’s robust reasoning abilities are equally compelling. The future will likely see further refinements and improvements in both models, leading to even more powerful and versatile AI tools. It’s an exciting time to witness this rapid evolution in the field of artificial intelligence.

In my opinion, the “best” model depends entirely on the specific application. Neither definitively “beats” the other; they excel in different areas. Further independent benchmarks and real-world usage will be crucial in determining the long-term dominance of either model.

We encourage you to share your experiences and thoughts on both Gemini 2.0 Flash Thinking and OpenAI o1 in the comments section below. What are your initial impressions? Which model do you find more impressive, and why? Let’s discuss! For those interested in delving deeper into the world of LLMs, we recommend exploring the official documentation from Google and OpenAI, as well as relevant research papers and articles available online.

FAQs (Frequently Asked Questions)

What is the key difference between Google Gemini 2.0 Flash Thinking and OpenAI o1?

- Gemini 2.0 Flash Thinking prioritizes speed and multimodal capabilities, making it well suited to applications requiring quick responses and the processing of more than one input type (text and images).

- OpenAI o1 excels in robust reasoning and generating detailed, coherent responses, making it suitable for tasks demanding in-depth analysis and complex problem-solving.

Which model is faster, Gemini 2.0 Flash Thinking or OpenAI o1?

Gemini 2.0 Flash Thinking is generally the faster of the two: the “Flash” line of models is designed for rapid response times. OpenAI o1 is generally slower, though independently verified head-to-head measurements are still limited.

Can both models handle images or only text?

- Gemini 2.0 Flash Thinking accepts both text and images as input.

- OpenAI o1’s current focus is primarily on text-based interactions, although multimodal capabilities are under development.

How do I choose between Gemini 2.0 Flash Thinking and OpenAI o1 for my needs?

- Choose Gemini 2.0 Flash Thinking if: You prioritize speed, require multimodal capabilities (text and images), or need a model seamlessly integrated with Google services.

- Choose OpenAI o1 if: You require sophisticated reasoning, need detailed and coherent responses, or are primarily focused on text-based interactions.

Where can I learn more about these models?

I recommend exploring the official documentation from Google and OpenAI, as well as relevant research papers and articles available online.