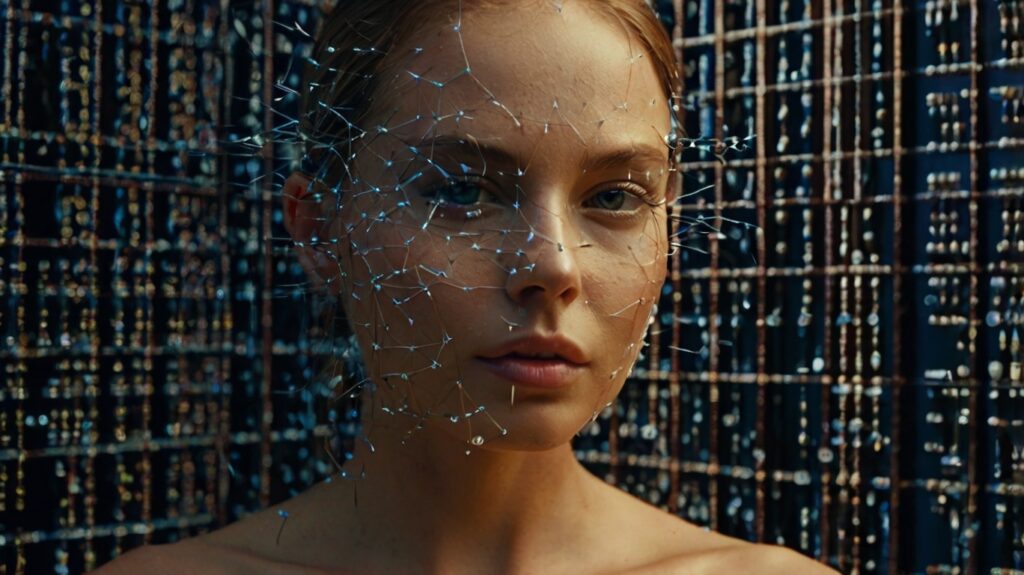

In artificial intelligence (AI) and machine learning (ML), embeddings play a pivotal role. They are numerical vector representations of complex objects, such as words, images, or videos, that capture each object's meaning and its relationships with other objects. Embeddings serve as the foundation for applications including natural language processing (NLP), computer vision, and recommender systems.

However, as these applications become more sophisticated and the volume of data they handle grows, so do the challenges associated with embeddings. Embeddings are typically stored as 32-bit floating-point vectors with hundreds or thousands of dimensions, so large collections can be cumbersome to store, transmit, and process. This can significantly hinder the performance and scalability of AI and ML models.

Enter Embedding Quantization: Shrinking the Footprint Without Compromising Performance

Embedding quantization addresses the storage and efficiency challenges posed by full-precision embeddings. It reduces the size of embeddings by representing them with lower-precision data types, such as lower-bit integers, lower-precision floating-point numbers, or single bits. This can lead to dramatic reductions in storage requirements and computational overhead while preserving most of the accuracy and effectiveness of the embeddings.

Delving into the Structure of Embedding Quantization

There are two primary approaches to embedding quantization:

Scalar Quantization

This method quantizes each element of an embedding vector individually. It replaces each original high-precision value (typically a 32-bit float) with the nearest value from a predefined codebook of lower-precision levels, for example the 256 levels that an 8-bit integer can represent. The codebook values are chosen to minimize the quantization error, which is the difference between the original value and its quantized representation.
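As a minimal sketch of scalar quantization, the example below maps float32 values to int8 using evenly spaced levels between a per-dimension minimum and maximum. The function names, the use of min/max ranges as the codebook, and the random data standing in for real embeddings are all assumptions made for illustration, not a prescribed method.

```python
import numpy as np

def fit_int8_ranges(calibration: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Per-dimension minimum and step size from a calibration set of embeddings."""
    lo = calibration.min(axis=0)
    hi = calibration.max(axis=0)
    # 256 evenly spaced levels span [lo, hi]; guard against constant dimensions.
    step = np.maximum(hi - lo, 1e-12) / 255.0
    return lo, step

def quantize_int8(x: np.ndarray, lo: np.ndarray, step: np.ndarray) -> np.ndarray:
    """Map each value to the nearest of 256 levels, stored as int8."""
    levels = np.clip(np.round((x - lo) / step), 0, 255)
    return (levels - 128).astype(np.int8)

def dequantize_int8(q: np.ndarray, lo: np.ndarray, step: np.ndarray) -> np.ndarray:
    """Approximate reconstruction of the original float values."""
    return (q.astype(np.float32) + 128.0) * step + lo

# Random vectors stand in for real embeddings (assumption for the demo).
rng = np.random.default_rng(0)
embeddings = rng.standard_normal((1000, 64)).astype(np.float32)

lo, step = fit_int8_ranges(embeddings)
codes = quantize_int8(embeddings, lo, step)
restored = dequantize_int8(codes, lo, step)

# Quantization error: difference between original and reconstructed values.
max_error = float(np.abs(embeddings - restored).max())
print(codes.dtype, codes.nbytes, embeddings.nbytes, max_error)
```

For values inside the calibrated range, the per-value error is bounded by about half a step, so wider ranges or fewer levels mean larger errors.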

Binary Embedding Quantization

This more aggressive approach quantizes each element of an embedding vector to a single bit, either 0 or 1, so each value takes 1 bit instead of the 32 bits of a 32-bit float. In its simplest form, each value is thresholded, for example by mapping positive values to 1 and all others to 0. This significantly reduces the storage footprint but discards more information, so maintaining accuracy can require more sophisticated techniques, such as learning projections that map the embedding space into a binary space while preserving the essential semantic relationships between objects.
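The simplest form of binary quantization can be sketched as follows, assuming a fixed threshold at zero rather than learned projections. The helper names and the random input data are hypothetical; packed codes are compared by Hamming distance, the number of differing bits.

```python
import numpy as np

def quantize_binary(x: np.ndarray) -> np.ndarray:
    """Threshold each value at zero and pack 8 bits into each uint8 byte."""
    bits = (x > 0).astype(np.uint8)
    return np.packbits(bits, axis=-1)

def hamming_distance(query_code: np.ndarray, codes: np.ndarray) -> np.ndarray:
    """Number of differing bits between one packed code and each row of codes."""
    differing = np.bitwise_xor(codes, query_code)
    return np.unpackbits(differing, axis=-1).sum(axis=-1)

# Random vectors stand in for real embeddings (assumption for the demo).
rng = np.random.default_rng(0)
embeddings = rng.standard_normal((1000, 64)).astype(np.float32)

codes = quantize_binary(embeddings)           # shape (1000, 8), dtype uint8
distances = hamming_distance(codes[0], codes)
nearest = np.argsort(distances)[:5]           # closest codes to the first vector
print(codes.shape, codes.nbytes, embeddings.nbytes, nearest)
```

Each 64-dimensional float32 vector (256 bytes) becomes 8 bytes here, a 32x reduction, at the cost of keeping only the sign of each value.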

The Advantages of Embedding Quantization

Embedding quantization offers several benefits for AI and ML applications:

- Reduced Storage Requirements: By lowering the precision of embeddings, quantization can significantly decrease their storage footprint. This is particularly advantageous for large-scale models that handle massive amounts of data. It can lead to substantial cost savings in cloud storage and enable the deployment of models on devices with limited storage capacity.

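- Faster Processing Speeds: Lower-precision embeddings need less memory bandwidth and cheaper arithmetic to compare. Binary vectors, for example, can be compared with XOR and bit-count operations instead of floating-point multiplications. This can translate to faster similarity search and inference for AI and ML models, leading to improved overall performance and responsiveness.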

- Enhanced Efficiency for Resource-Constrained Devices: Embedding quantization empowers the deployment of AI models on devices with limited computational resources, such as mobile phones and embedded systems. The reduced size and processing demands of quantized embeddings make them ideal for real-time applications on edge devices.

- Improved Scalability: By mitigating storage and processing bottlenecks, embedding quantization paves the way for the development and deployment of even larger and more complex AI models. This opens doors for advancements in various fields, such as natural language processing, computer vision, and recommender systems.
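The storage savings listed above follow directly from the number of bits per value. The sketch below works through the arithmetic for a hypothetical collection; the corpus size and dimension are assumed values chosen only to make the numbers concrete, and index or metadata overhead is ignored.

```python
def embedding_bytes(num_vectors: int, dims: int, bits_per_value: int) -> int:
    """Raw storage for the vectors alone, ignoring index and metadata overhead."""
    return num_vectors * dims * bits_per_value // 8

NUM_VECTORS = 1_000_000   # hypothetical corpus size
DIMS = 1024               # hypothetical embedding dimension

sizes = {
    "float32": embedding_bytes(NUM_VECTORS, DIMS, 32),
    "int8": embedding_bytes(NUM_VECTORS, DIMS, 8),
    "binary": embedding_bytes(NUM_VECTORS, DIMS, 1),
}

for name, size in sizes.items():
    ratio = sizes["float32"] // size
    print(f"{name:>8}: {size / 1e9:.3f} GB (float32 size divided by {ratio})")
```

Under these assumptions the float32 vectors take 4.096 GB, the int8 vectors 1.024 GB, and the binary vectors 0.128 GB.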

Limitations and Considerations of Embedding Quantization

While embedding quantization offers significant advantages, it is essential to acknowledge some potential limitations and considerations:

- Potential Loss of Accuracy: Quantization inherently introduces some degree of error, as the original high-precision values are approximated by lower-precision representations. The impact on accuracy can vary depending on the quantization method, the level of precision reduction, and the specific application.

- Finding the Optimal Quantization Strategy: The effectiveness of embedding quantization hinges on selecting the appropriate quantization method and precision level. This often involves experimentation and fine-tuning to achieve the optimal balance between accuracy and efficiency for a particular application.

- Potential Compatibility Issues: Quantized embeddings might not always be directly compatible with existing models or libraries that were designed for high-precision embeddings. This may necessitate adjustments or adaptations to ensure seamless integration.
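One way to gauge the accuracy loss for a particular application is to compare search results before and after quantization. The sketch below measures how many of the full-precision top-k neighbours a sign-bit ranking also returns. The helper names are hypothetical and the data is random, so the printed value only demonstrates the measurement and says nothing about real embedding models.

```python
import numpy as np

def top_k(scores: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k highest scores."""
    return np.argsort(-scores)[:k]

def overlap_at_k(reference: np.ndarray, candidate: np.ndarray) -> float:
    """Fraction of reference neighbours that the candidate ranking also returns."""
    shared = set(reference.tolist()) & set(candidate.tolist())
    return len(shared) / len(reference)

# Random vectors stand in for a real corpus and query (assumption for the demo).
rng = np.random.default_rng(0)
corpus = rng.standard_normal((2000, 128)).astype(np.float32)
query = rng.standard_normal(128).astype(np.float32)

# Reference ranking from full-precision dot products.
exact = top_k(corpus @ query, k=10)

# Candidate ranking from sign bits only: more matching bits means more similar.
matching_bits = ((corpus > 0) == (query > 0)).sum(axis=1)
approx = top_k(matching_bits, k=10)

overlap = overlap_at_k(exact, approx)
print(f"overlap@10 = {overlap:.2f}")
```

Running the same comparison on a sample of real queries, for each candidate quantization method and precision level, gives a concrete basis for choosing between them.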

Pricing and Cost Considerations

The cost associated with embedding quantization is primarily indirect. There are no specific licensing fees or charges for using the technique itself. However, the cost benefits of quantization materialize through:

- Reduced Storage Costs: By minimizing storage requirements, embedding quantization can lead to substantial savings in cloud storage expenses. This is especially beneficial for large-scale applications that handle massive datasets.

- Lower Computational Costs: The faster processing speeds enabled by quantized embeddings can translate to reduced computational costs, particularly when running models on cloud-based infrastructure.

- Improved Model Deployment Efficiency: The ability to deploy quantized models on resource-constrained devices can eliminate the need for high-powered hardware, leading to cost savings in infrastructure and energy consumption.

Real-World Applications of Embedding Quantization

Embedding quantization has found applications in a wide range of domains, including:

- Natural Language Processing (NLP): Quantizing word embeddings can significantly reduce the memory footprint of language models, enabling faster and more efficient text processing tasks.

- Computer Vision: Quantizing image embeddings can accelerate image search, object detection, and image recognition applications, making them more suitable for real-time use cases.

- Recommender Systems: Quantizing user and item embeddings can improve the speed and scalability of recommendation engines, allowing them to serve larger catalogs with lower latency and memory use.

- Search Engines: Quantizing search embeddings can enhance search speed and efficiency, enabling faster retrieval of relevant results.

Conclusion

Embedding quantization is a powerful and versatile technique for optimizing embedding-based applications. By reducing storage requirements, accelerating processing speeds, and enhancing efficiency, quantization enables the development and deployment of more scalable and performant AI and ML models. Its limitations and trade-offs, above all the potential loss of accuracy, need to be evaluated carefully for each application.

References

Binary and Scalar Embedding Quantization for Significantly Faster & Cheaper Retrieval

FAQs (Frequently Asked Questions)

What is embedding quantization?

Embedding quantization is a technique that reduces the size of embeddings by representing them with lower-precision data types. This leads to significant reductions in storage and computational requirements while preserving the core information of the embeddings.

What are the main types of embedding quantization?

There are two primary methods:

- Scalar Quantization: Each element of an embedding vector is replaced with a lower-precision value from a predefined codebook.

- Binary Embedding Quantization: Each element is converted to a single bit (0 or 1), drastically reducing size but requiring more complex methods to maintain accuracy.

What are the benefits of embedding quantization?

- Reduced storage requirements

- Faster processing speeds

- Enhanced efficiency for resource-constrained devices

- Improved scalability

What are the limitations of embedding quantization?

- Potential loss of accuracy

- Finding the optimal quantization strategy can be complex

- Potential compatibility issues with existing models

Where is embedding quantization used?

Embedding quantization finds applications in various fields:

- Natural Language Processing (NLP)

- Computer Vision

- Recommender Systems

- Search Engines

Does embedding quantization affect accuracy?

While there might be a slight decrease in accuracy due to quantization, the improvement in speed and efficiency often outweighs this trade-off.

How much does embedding quantization cost?

The direct costs of embedding quantization are minimal. However, its benefits translate to cost savings in storage, computation, and infrastructure.

How does embedding quantization differ from dimensionality reduction?

Unlike techniques such as PCA, embedding quantization doesn't reduce the number of dimensions but instead reduces the size of each value within the embedding.
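The difference can be seen in the shapes and data types that each approach produces. The sketch below is an illustration under assumed settings: random vectors stand in for real embeddings, PCA is computed with a plain SVD, the target of 64 components is arbitrary, and the int8 quantization uses simple per-dimension min/max ranges.

```python
import numpy as np

# Random vectors stand in for real embeddings (assumption for the demo).
rng = np.random.default_rng(0)
embeddings = rng.standard_normal((500, 256)).astype(np.float32)

# PCA-style reduction: project onto the top 64 principal directions.
centered = embeddings - embeddings.mean(axis=0)
_, _, components = np.linalg.svd(centered, full_matrices=False)
reduced = centered @ components[:64].T    # fewer dimensions, still float32

# Scalar quantization: same 256 dimensions, one byte per value.
lo = embeddings.min(axis=0)
step = np.maximum(embeddings.max(axis=0) - lo, 1e-12) / 255.0
levels = np.clip(np.round((embeddings - lo) / step), 0, 255)
quantized = (levels - 128).astype(np.int8)

for name, arr in [("original", embeddings), ("pca", reduced), ("int8", quantized)]:
    print(f"{name:>8}: shape={arr.shape}, dtype={arr.dtype}, bytes={arr.nbytes}")
```

In this setup both results take a quarter of the original bytes, but PCA gets there by keeping 64 of the 256 dimensions at full precision, while quantization keeps all 256 dimensions at one byte each.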